MDS Newsletter #70

🚨Attention Data Folks🚨

Get ready for the ultimate data party on 2nd March at Huckletree, London as Modern Data Stack is hosting its first-ever event 🔥🧡 The Modern Data Summit’23 in partnership with Cocoa

We've got a jam-packed agenda filled with insightful talks, interactive sessions, and networking opportunities And the best part? We'll be announcing our amazing lineup of speakers soon, so stay tuned for some big names in the data world. 🤩

Don't miss out on this opportunity to learn, connect, and have fun. See you at the Modern Data Summit! 🎉

Don't miss out on this opportunity to learn the latest trends in data and connect with your data peers. Grab your free spot now, before they're all gone! 💥

Featured tools of the week

- Collibra: is a data intelligence solution provider. It offers a cloud-based platform that connects IT and the business to build a data-based culture for the digital enterprise. Collibra removes the complexity of data management to give you the perfect balance between powerful analytics and ease of use. Its platform unlocks your data to solve problems, implement ideas and grow your business.

Collibra has raised a total of $596.5M in funding over 9 rounds. Their latest funding was raised on Jan 11, 2022, from a Venture - Series Unknown round. - Amperity: Amperity is the leading customer data platform (CDP) provider that helps companies put data to work to improve marketing performance, build long-term customer loyalty and drive revenue.

Amperity has raised a total of $187M in funding over 5 rounds. Their latest funding was raised on Jul 13, 2021, from a Series D round.

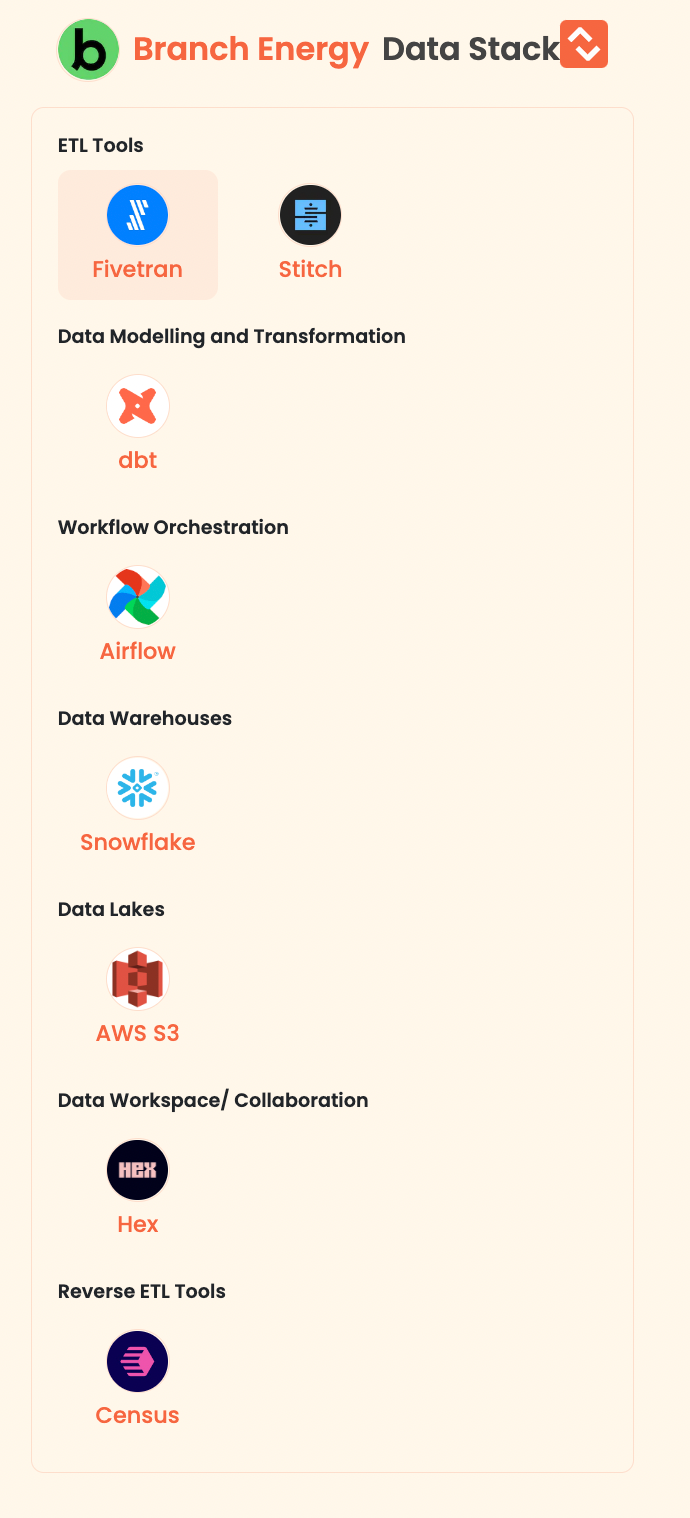

Featured stack of the week

- Branch Energy: Branch Energy is a technology company that makes it easy for customers to lower their energy bills and carbon footprint while providing dramatically better customer experiences than incumbents.

Here are the data tools of Branch Energy:

Good reads and resources

- Introduction to Incremental ETL in Databricks: Incremental ETL refers to the ability to process only new or changed data since the last ingestion. This helps to achieve scalability by not re-processing data that has already been processed. It also requires idempotency, meaning that the same data always results in the same outcome, which is tricky to achieve and requires careful maintenance of the state. Traditionally, implementing proper incremental ETL required a lot of setup and maintenance.

In Databricks, features such as COPY INTO and AutoLoader abstract the complexities of incremental ETL and provide a robust and easy-to-use way to process only new or updated files. The COPY INTO command is a SQL command that performs the incremental ETL by loading data from a folder in cloud object storage into a Delta table and doesn't require custom bookkeeping. It is well-suited for scheduled or on-demand ingestion use cases and can be used in both SQL and notebooks.

By Oindrila Chakraborty - Data Mesh — A Data Movement and Processing Platform @ Netflix: The article is about Data Mesh, a data movement and processing platform developed by Netflix. The platform, which is a next-generation data pipeline solution, is designed to handle not only Change Data Capture (CDC) use cases but also more general data movement and processing use cases. The system can be divided into a control plane (Data Mesh Controller) and a data plane (Data Mesh Pipeline). The controller receives user requests and deploys and orchestrates pipelines, while the pipeline performs the actual data processing work.

Data Mesh can read data from various sources, apply transformations on the incoming events and eventually sink them into the destination data store. The platform can be created from the UI or via a declarative API. The article also mentions that Data Mesh has expanded its scope to handle more generic applications, an increased catalog of DB connectors, and more processing patterns.

By Bo LEI, Guil Pires, Yufeng (James) SHAO, Kasturi Chatterjee, Sujay Jain, and Vlad Sydorenko 🇺🇦

MDS Journal

- What is Data observability, do I need it? : This article is written by Jatin Solanki who discusses the challenges that companies face in ensuring data reliability and the need for a solution that is easy to use, understand, and deploy, and not heavy on investment. He argues that the foundation for data reliability begins with a reliable data source or defining source of truth and suggests implementing checks such as volume, freshness, schema change, distribution, lineage, and reconciliation to ensure data reliability. He also addresses the common question of "Build versus Buy" and suggests that while he is a fan of open-source technology, in some critical modules, it may be more efficient to buy an out-of-the-box solution that is scalable and already tested in the market.

- 10 tips for building an advanced data platform: This article is written by Kirill Denisenko who provides tips for building an advanced data platform, including building the platform as a product, using an asset-based approach to data, managing the infrastructure as code, setting limits and partitioning/clustering tables, choosing between SaaS, open-source solutions or building your own, creating an internal data catalog, monitoring center, keeping things simple for users and periodically checking data inventory. He also mentions his company's experience with migrating to a new data platform and the lessons they learned during the process.

If you also have an interesting blog that you would like us to share with the data community, submit it here.

MDS Jobs

- The Movement Cooperative is hiring Data Engineer

Location: Remote

Stack: dbt

Apply here - Wahoo Fitness is hiring Data Engineer + BI Analyst

Location: Remote

Stack: AWS, Redshift, dbt,

Apply here - The Farmer’s Dog is hiring Sr. Data Engineer

Location: Remote

Stack: Airflow, Fivetran, dbt,

Apply here

🔥 Trending on Twitter

Just for fun 😀

Subscribe to our Newsletter, Follow us on Twitter and LinkedIn, and never miss data updates again.

What do you think about our weekly Newsletter?

Love it | It's great | Good | Okay-ish | Meh

If you have any suggestions, want us to feature an article, or list a data engineering job, hit us up! We would love to include it in our next edition😎

About Moderndatastack.xyz - We're building a platform to bring together people in the data community to learn everything about building and operating a Modern Data Stack. It's pretty cool - do check it out :)